Human-in-the-Loop (HITL): Why AI Still Needs Humans

Human-in-the-loop systems combine AI speed with human judgment. Learn how HITL works, why AI needs oversight, and where it’s essential.

Artificial intelligence is incredibly good at processing data and spotting patterns at scale. But when decisions require judgment, accountability, and an understanding of context, AI still struggles. That limitation explains why many AI systems perform well in benchmarks but break down in real-world use. AI does not understand consequences. It makes predictions, not decisions. And when outcomes affect people’s health, finances, rights, or safety, accuracy alone is not enough.

This is where human-in-the-loop (HITL) systems come in. They are not a quick fix, but a core design approach that combines AI efficiency with human judgment to make real-world AI systems safer and more reliable.

The Core Problem With Fully Automated AI

AI systems are designed to optimize patterns in historical data. Humans, by contrast, evaluate intent, nuance, and accountability. When AI is left to operate independently in complex environments, several failure modes repeatedly appear:

Biased hiring algorithms that replicate historical discrimination

Medical misdiagnoses caused by opaque, black-box models

Autonomous systems that fail in rare but dangerous edge cases

A growing trust gap between AI outputs and real-world decision-making

These failures are not bugs. They are structural limitations of automation.

To bridge the gap between machine efficiency and real-world responsibility, organizations increasingly rely on human oversight of AI, embedding people directly into AI workflows.

What Is Human-in-the-Loop?

Human-in-the-loop (HITL) refers to the integration of human judgment, review, and control into AI and machine learning systems. Instead of allowing AI to operate entirely autonomously, HITL systems ensure that humans train, guide, validate, correct, or override machine decisions at critical points.

In a human-in-the-loop AI system, intelligence is shared:

Machines provide speed, scale, and pattern recognition

Humans provide context, ethics, domain expertise, and accountability

This creates a continuous feedback cycle where humans improve AI models, and AI enhances human decision-making.

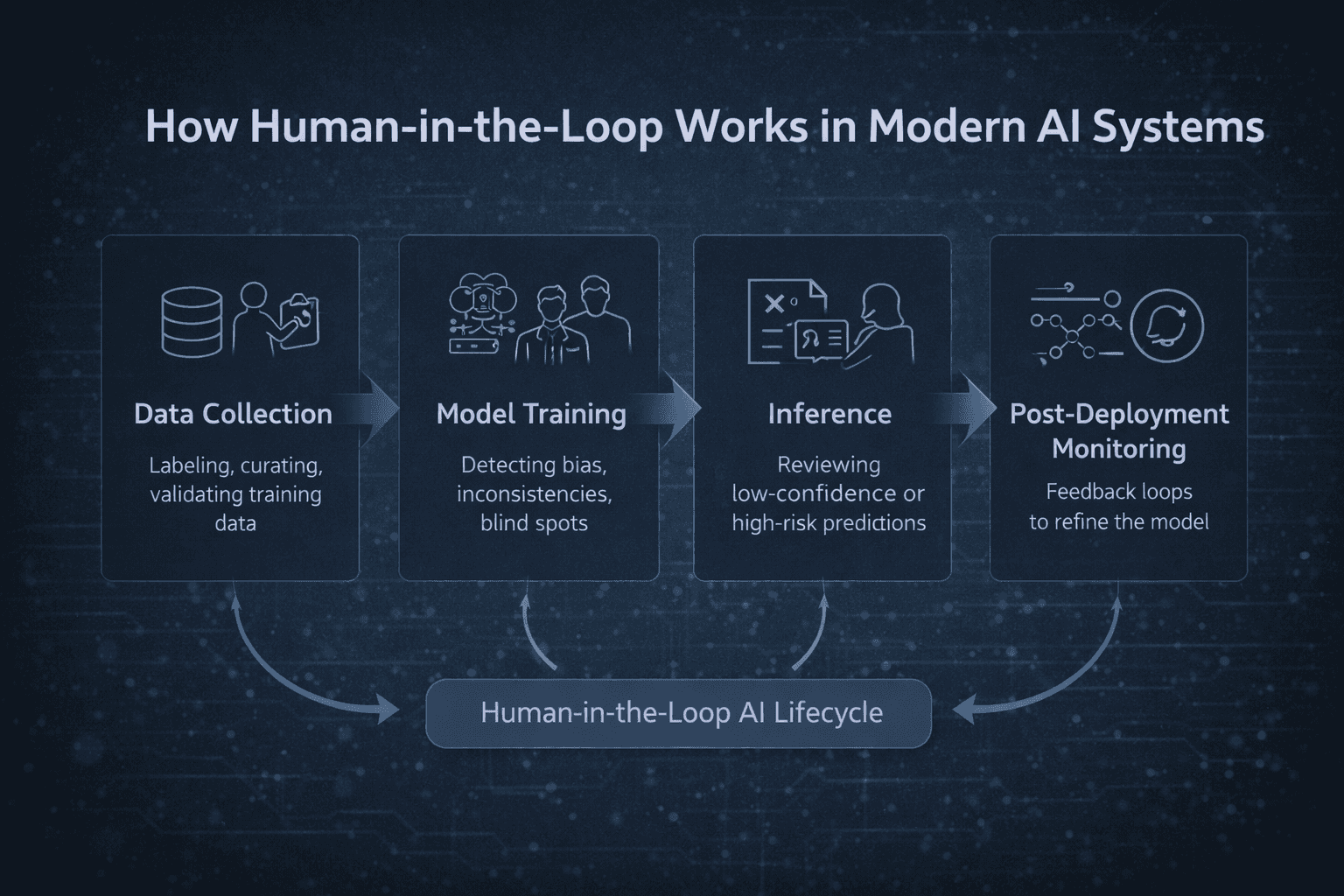

How Human-in-the-Loop Works in Modern AI Systems

In practice, HITL machine learning is not a single step. It is a system-level design approach that spans the entire AI lifecycle:

Data collection: Humans label, curate, and validate training data

Model training: Experts detect bias, inconsistencies, and blind spots

Inference: Humans review low-confidence or high-risk predictions

Post-deployment monitoring: Feedback loops refine the model over time
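The feedback stage can be sketched in a few lines. This is a minimal illustration using hypothetical names (`FeedbackLoop` and `retrain_after` do not come from any library). Human reviews that disagree with the model are kept as new labeled examples. Once enough of them accumulate, the batch is handed off for retraining.

```python
from dataclasses import dataclass, field


@dataclass
class FeedbackLoop:
    """Collects human corrections and signals when enough exist to retrain.

    `retrain_after` is a hypothetical batch size chosen for illustration.
    """
    retrain_after: int = 3
    corrections: list[tuple[str, str]] = field(default_factory=list)

    def record(self, example: str, model_label: str, human_label: str) -> bool:
        """Store a disagreement as a new training example.

        Returns True once the backlog is large enough to trigger retraining.
        """
        if human_label != model_label:
            # Only disagreements carry new information for the model.
            self.corrections.append((example, human_label))
        return len(self.corrections) >= self.retrain_after

    def drain(self) -> list[tuple[str, str]]:
        """Hand the corrections to a training job and reset the backlog."""
        batch, self.corrections = self.corrections, []
        return batch


loop = FeedbackLoop(retrain_after=2)
loop.record("txn-1", "legit", "legit")  # agreement: nothing stored
loop.record("txn-2", "legit", "fraud")  # correction stored
ready = loop.record("txn-3", "fraud", "legit")
print(ready, loop.drain())  # True [('txn-2', 'fraud'), ('txn-3', 'legit')]
```

In a real pipeline, `drain()` would feed a labeling store or training job rather than return a list. The threshold-based trigger is only one option; scheduled retraining is just as common.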

Human roles within these systems may include:

Reviewers validating AI outputs

Annotators improving training data

Decision-makers approving outcomes

Override authorities intervening when AI fails

Human-in-the-Loop in Theory vs Practice

In theory, HITL sounds simple: add a human reviewer.

In practice, it is far more nuanced.

In real systems, human touchpoints are introduced in different ways:

Continuous involvement, where humans regularly review outputs

Exception-based intervention, triggered by confidence thresholds or anomalies

For example:

AI may flag medical scans, but radiologists make the final diagnosis

Chatbots handle routine queries and escalate complex cases to humans

Fraud models detect suspicious transactions that are verified by analysts

This balance is what makes human-in-the-loop automation both scalable and trustworthy.
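Here is a minimal sketch of how the two touchpoints can coexist, assuming the model reports a confidence score between 0 and 1. The function name and both default values are hypothetical, and real thresholds would be tuned per use case. Predictions below the threshold are escalated as exceptions. A small random sample of the remaining, confident predictions is also spot-checked, so reviewers keep seeing cases the model believes it got right.

```python
import random
from typing import Optional


def select_for_review(confidence: float,
                      threshold: float = 0.85,
                      sample_rate: float = 0.05,
                      rng: Optional[random.Random] = None) -> Optional[str]:
    """Return why a prediction should go to a human, or None to auto-accept.

    threshold and sample_rate are illustrative values, not recommendations.
    """
    if rng is None:
        rng = random.Random()
    # Exception-based: the model is unsure, so a human decides.
    if confidence < threshold:
        return "low_confidence"
    # Continuous: spot-check a random fraction of confident predictions.
    if rng.random() < sample_rate:
        return "spot_check"
    return None


print(select_for_review(0.62))                   # low_confidence
print(select_for_review(0.97, sample_rate=0.0))  # None
```

The spot-check sample matters because confidence-based escalation alone never shows reviewers the errors a model makes while confident.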

Human-in-the-Loop vs Human-on-the-Loop vs Human-out-of-the-Loop

Not all AI systems require the same level of human involvement.

Human-in-the-Loop (HITL)

Humans actively participate in training the model and in reviewing and correcting its decisions as they are made.

Best for: High-stakes, ambiguous, or evolving problems

Pros: Accuracy, ethics, adaptability

Cons: Slower and more resource-intensive

Human-on-the-Loop (HOTL)

AI operates autonomously, but humans monitor outcomes and intervene when failures occur.

Best for: Time-sensitive systems where some errors are tolerable

Pros: Balance of speed and safety

Cons: Relies on fast failure detection

Human-out-of-the-Loop (HOOTL)

Fully automated systems with no operational human involvement.

Best for: Low-risk, rule-based tasks

Pros: Maximum scalability

Cons: Minimal accountability and high systemic risk
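One way to see the difference between the three models is to ask where the human sits relative to the action. The sketch below is illustrative only. `approve` stands in for a human reviewer, `monitor_log` stands in for whatever dashboard or queue a monitoring team watches, and the `Oversight` enum is a hypothetical name.

```python
from enum import Enum
from typing import Callable


class Oversight(Enum):
    IN_THE_LOOP = "hitl"        # a human approves before the action takes effect
    ON_THE_LOOP = "hotl"        # the action runs; a human monitors and can intervene
    OUT_OF_THE_LOOP = "hootl"   # the action runs with no operational human role


def execute(action: str, mode: Oversight,
            approve: Callable[[str], bool],
            monitor_log: list[str]) -> bool:
    """Return True if the action goes ahead under the given oversight mode."""
    if mode is Oversight.IN_THE_LOOP:
        # Blocking: nothing happens until the human says yes.
        return approve(action)
    if mode is Oversight.ON_THE_LOOP:
        # Non-blocking: act now, but leave a trail a human monitor can review.
        monitor_log.append(action)
        return True
    # Fully automated: act with no human touchpoint at all.
    return True


def reviewer(action: str) -> bool:
    # Hypothetical stand-in for a person who rejects large refunds.
    return "5,000" not in action


log: list[str] = []
print(execute("refund $5,000", Oversight.IN_THE_LOOP, reviewer, log))  # False
print(execute("refund $20", Oversight.ON_THE_LOOP, reviewer, log))     # True
print(log)  # ['refund $20']
```

The trade-offs listed above fall out of the code. The in-the-loop branch waits on a person, which makes it slower. The on-the-loop branch is only as safe as whoever reads the log.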

What Is Human-in-the-Loop Automation?

Human-in-the-loop automation combines AI efficiency with structured human intervention. Humans do not control every step, but they are embedded at the decision points that matter most.

Common mechanisms include:

Confidence thresholds that trigger review

Anomaly detection for unusual inputs

Manual overrides for ethical or legal concerns

This approach enables semi-automation that is faster than manual workflows and far safer than full autonomy.
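The three mechanisms can be combined in a single routing function. This sketch rests on several assumptions. The `Prediction` fields, the `legal_hold` flag, and the thresholds are all hypothetical. The z-score on one numeric feature is a deliberately simple stand-in for a real anomaly detector.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class Prediction:
    label: str
    confidence: float
    amount: float             # one numeric input feature, used for the anomaly check
    legal_hold: bool = False  # hypothetical flag set by a compliance rule


def route(pred: Prediction, history: list[float],
          min_confidence: float = 0.9, max_z: float = 3.0) -> str:
    """Return 'auto', or the reason a human must review this prediction."""
    # 1. Manual override: some cases always go to a person.
    if pred.legal_hold:
        return "override"
    # 2. Confidence threshold: the model itself is unsure.
    if pred.confidence < min_confidence:
        return "low_confidence"
    # 3. Anomaly detection: the input is far from what the model usually sees.
    if len(history) >= 2:
        sigma = stdev(history)
        if sigma > 0 and abs(pred.amount - mean(history)) / sigma > max_z:
            return "anomaly"
    return "auto"


history = [20.0, 25.0, 22.0, 30.0, 18.0]
print(route(Prediction("legit", 0.97, 24.0), history))                   # auto
print(route(Prediction("legit", 0.97, 900.0), history))                  # anomaly
print(route(Prediction("legit", 0.60, 24.0), history))                   # low_confidence
print(route(Prediction("legit", 0.99, 24.0, legal_hold=True), history))  # override
```

The order of the checks is a design choice. Overrides come first so that a high-confidence prediction can never bypass a legal or ethical hold.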

Real-World Use Case: HITL in Fraud Detection (Stripe)

A widely cited example of human-in-the-loop AI in production is Stripe Radar, which uses machine learning to detect fraudulent payments while allowing human review and feedback to continuously improve accuracy.

Stripe explicitly combines automated fraud scoring with human-driven validation and dispute feedback, reducing false positives without increasing financial risk.

This is a practical illustration of how HITL reduces risk while preserving scale.

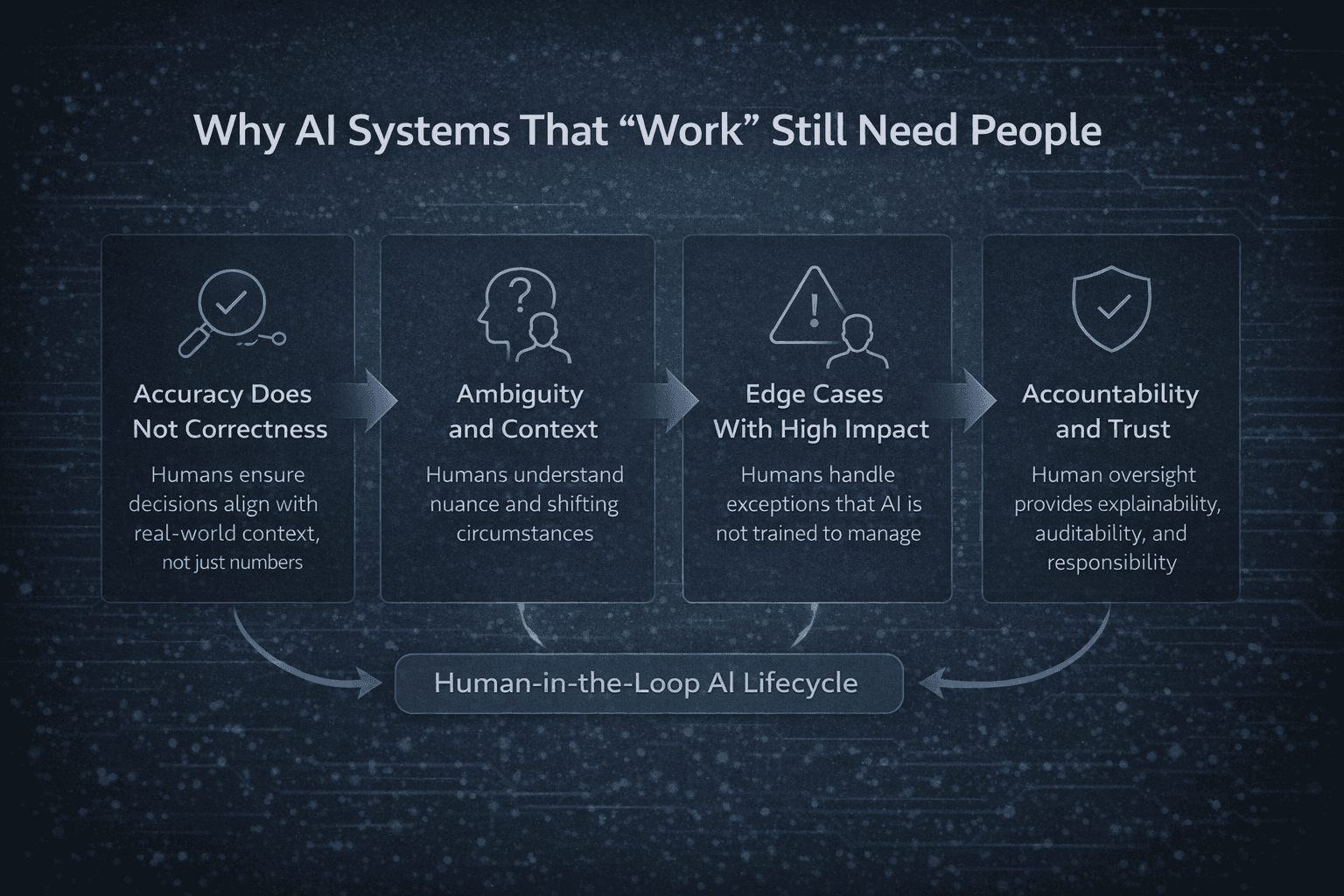
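The general pattern behind this kind of system can be sketched as a three-band policy. This is a generic illustration, not Stripe Radar's implementation or API, and the class name and thresholds are hypothetical. Scores below one threshold are allowed and scores above another are blocked. The uncertain middle goes to an analyst, whose verdict is kept as a training label. Sending borderline payments to review, instead of blocking them outright, is how human judgment can cut false positives.

```python
from dataclasses import dataclass, field


@dataclass
class FraudTriage:
    """Allow low scores, block high scores, queue the middle for an analyst.

    Thresholds are illustrative. This is not Stripe Radar's implementation.
    """
    allow_below: float = 0.3
    block_above: float = 0.8
    review_queue: list[str] = field(default_factory=list)
    labels: dict[str, bool] = field(default_factory=dict)  # txn id -> is_fraud

    def triage(self, txn_id: str, score: float) -> str:
        if score < self.allow_below:
            return "allow"
        if score > self.block_above:
            return "block"
        # Borderline: a human decides instead of the model.
        self.review_queue.append(txn_id)
        return "review"

    def analyst_verdict(self, txn_id: str, is_fraud: bool) -> None:
        """Record the human decision; the label can later feed retraining."""
        self.review_queue.remove(txn_id)
        self.labels[txn_id] = is_fraud


t = FraudTriage()
for txn, score in [("t1", 0.1), ("t2", 0.55), ("t3", 0.95)]:
    print(txn, t.triage(txn, score))  # t1 allow / t2 review / t3 block
t.analyst_verdict("t2", is_fraud=False)  # analyst clears a would-be false positive
print(t.review_queue, t.labels)          # [] {'t2': False}
```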

Why AI Systems That “Work” Still Need People

Accuracy Does Not Equal Correctness

AI can be statistically accurate and still wrong in context. Human validation of AI decisions helps ensure they align with real-world consequences, not just metrics.
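Imbalanced data gives a classic illustration of this gap. Take hypothetical numbers: 1,000 cases, 1% of them positive (for example, fraud or disease). A "model" that never flags anything scores 99% accuracy, yet it catches none of the cases that matter.

```python
def accuracy(y_true: list[int], y_pred: list[int]) -> float:
    """Share of all predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def recall(y_true: list[int], y_pred: list[int]) -> float:
    """Share of real positive cases (label 1) that the model caught."""
    positives = [p for t, p in zip(y_true, y_pred) if t == 1]
    return sum(positives) / len(positives) if positives else 0.0


# Hypothetical data: 1,000 cases, 10 of which are positive.
y_true = [1] * 10 + [0] * 990
# A degenerate model that always predicts the majority class.
y_pred = [0] * 1000

print(accuracy(y_true, y_pred))  # 0.99
print(recall(y_true, y_pred))    # 0.0
```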

Ambiguity and Context

Humans interpret nuance, intent, and changing circumstances, areas where AI consistently struggles.

Edge Cases With High Impact

Rare scenarios carry disproportionate risk. Human oversight protects systems when models encounter unfamiliar situations.

Accountability and Trust

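Fully automated systems lack clear responsibility. HITL assigns a named person to each critical decision and leaves a record of what was decided and why, which restores explainability, auditability, and trust.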
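In practice, auditability usually means recording who decided what, and why, for each reviewed case. The schema below is a hypothetical example, not a standard. The field names are assumptions, and JSON lines is just one convenient append-only format.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ReviewRecord:
    """One auditable human decision about one model output (illustrative schema)."""
    case_id: str
    model_version: str
    model_output: str
    model_confidence: float
    reviewer_id: str
    final_decision: str
    overridden: bool  # True when the human disagreed with the model
    reason: str
    reviewed_at: str  # ISO 8601 timestamp in UTC


def log_review(case_id: str, model_version: str, model_output: str,
               confidence: float, reviewer_id: str,
               final_decision: str, reason: str) -> str:
    """Build one JSON-lines audit entry for a human review."""
    record = ReviewRecord(
        case_id=case_id,
        model_version=model_version,
        model_output=model_output,
        model_confidence=confidence,
        reviewer_id=reviewer_id,
        final_decision=final_decision,
        overridden=final_decision != model_output,
        reason=reason,
        reviewed_at=datetime.now(timezone.utc).isoformat(),
    )
    return json.dumps(asdict(record))


entry = log_review("loan-123", "credit-model-v7", "deny", 0.58,
                   "analyst-42", "approve", "Income verified manually")
print(json.loads(entry)["overridden"])  # True
```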

Why You Need a Human-in-the-Loop System

Organizations adopt human-in-the-loop systems to ensure AI is:

Accurate and reliable in edge cases

Ethical and less biased through human review

Transparent and explainable for compliance

Adaptable through continuous feedback

Aligned with domain expertise

Human judgment fills the gaps automation cannot.

When Human-in-the-Loop Is Essential

HITL is critical in areas such as:

Finance and fraud prevention

Legal and document processing

Customer service escalation

Content moderation

Autonomous systems

Data annotation and model training

In these environments, removing humans is not efficiency. It is liability.

Human-in-the-Loop vs Fully Automated AI

| Aspect | Human-in-the-Loop | Fully Automated AI |
|---|---|---|
| Accuracy | High, especially in edge cases | Strong on familiar patterns |
| Risk | Reduced through live oversight | Higher systemic risk |
| Scalability | Limited by human bandwidth | Scales massively |
| Cost | Higher operating cost, often offset by avoided errors | Cheaper for routine tasks |
| Accountability | Clear human ownership | Often opaque |
| Compliance | Audit-ready | Requires heavy instrumentation |
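The cost row can be made concrete with a simple break-even rule. Human review of a case pays off when the expected loss it prevents (catch rate × error rate × cost of an error) exceeds the cost of the review. In the sketch below, the catch rate, error rate, and dollar figures are all hypothetical. The rule also ignores second-order effects such as reviewer fatigue or the cost of delay.

```python
def review_pays_off(p_error: float, error_cost: float,
                    review_cost: float, catch_rate: float = 1.0) -> bool:
    """True if the expected loss avoided by review exceeds what the review costs.

    Assumes a reviewer catches `catch_rate` of the model's errors and that
    catching an error avoids its full cost; both are simplifications.
    """
    expected_loss_avoided = catch_rate * p_error * error_cost
    return expected_loss_avoided > review_cost


# Hypothetical: 2% error rate, $5,000 per mistake, $15 of analyst time per review.
print(review_pays_off(0.02, 5_000, 15, catch_rate=0.8))  # True  (about 80 > 15)
# Same error rate on a $100 decision: the review costs more than it saves.
print(review_pays_off(0.02, 100, 15, catch_rate=0.8))    # False (about 1.6 < 15)
```

The same rule explains when to drop human review. Once errors are cheap enough, the left-hand side falls below the review cost and full automation wins.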

Is Human-in-the-Loop the Future of AI?

Yes, and increasingly, it is unavoidable.

As AI systems take on more critical decisions, regulation, ethical expectations, and public trust increasingly demand human oversight. Human-in-the-loop is not a fallback. It is the operating model for responsible AI.

The future belongs to systems where:

AI handles scale

Humans guide judgment

Together, they deliver outcomes neither could achieve alone.

In production environments, especially for teams deploying AI at scale, HITL is rarely optional. In regulated industries such as healthcare and finance, and in enterprise AI deployments with similar obligations, human oversight is essential for compliance, auditability, and accountability.

Frequently Asked Questions (FAQs)

What is the difference between HITL and automation?

Automation executes tasks without human judgment, while human-in-the-loop systems embed human review and control at critical decision points to manage risk, context, and accountability.

Is human-in-the-loop expensive?

Human-in-the-loop adds operational cost, but it often reduces total business risk by preventing costly AI errors, regulatory violations, and reputational damage.

When should you avoid human-in-the-loop?

You should avoid HITL for low-risk, repetitive, and well-defined tasks where errors are inexpensive and full automation delivers clear efficiency gains.

What is human-in-the-loop in AI?

Human-in-the-loop integrates humans into AI workflows for training, review, and decision validation.

Is human-in-the-loop necessary for machine learning?

Not for every system, but it is usually necessary for production ML systems that handle complex, real-world data.

How does HITL improve AI accuracy?

It improves accuracy through continuous human feedback, correction of errors such as hallucinations, and human handling of novel or ambiguous scenarios.

What industries rely on HITL systems?

Healthcare, finance, business intelligence (BI), e-commerce, content moderation, and autonomous systems.

Can AI work without human-in-the-loop?

Yes, but only for low-risk, repetitive tasks with limited consequences.